
Editor: Michael Orr

Technical Editor: Heather Stern

Senior Contributing Editor: Jim Dennis

Contributing Editors: Ben Okopnik, Dan Wilder, Don Marti

TWDT 1 (gzipped text file) and TWDT 2 (HTML file) are files containing the entire issue: one in text format, one in HTML. They are provided strictly as a way to save the contents as one file for later printing in the format of your choice; there is no guarantee of working links in the HTML version.

http://www.linuxgazette.com/

This page maintained by the Editor of Linux Gazette, Copyright © 1996-2001 Specialized Systems Consultants, Inc.

The Mailbag

Send tech-support questions, answers and article ideas to The Answer Gang <>. Other mail (including questions or comments about the Gazette itself) should go to <>. All material sent to either of these addresses will be considered for publication in the next issue. Please send answers to the original querent too, so that s/he can get the answer without waiting for the next issue.

Unanswered questions might appear here. Questions with answers--or answers only--appear in The Answer Gang, 2-Cent Tips, or here, depending on their content. There is no guarantee that questions will ever be answered, especially if not related to Linux.

Before asking a question, please check the Linux Gazette FAQ to see if it has been answered there.

ip_always_defrag missing from kernel 2.4?

In its place there appear to be three new parameters:

ipfrag_high_thresh - INTEGER

Maximum memory used to reassemble IP fragments. When ipfrag_high_thresh bytes of memory is allocated for this purpose, the fragment handler will toss packets until ipfrag_low_thresh is reached.

ipfrag_low_thresh - INTEGER

See ipfrag_high_thresh

ipfrag_time - INTEGER

Time in seconds to keep an IP fragment in memory.

Any idea what are 'reasonable' settings?

What settings will mimic, as closely as possible, the behavior of ip_always_defrag?

-- James Garrison
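Before experimenting with new values, it can help to see what the running kernel currently uses. On a 2.4 kernel the three parameters quoted above appear as files under /proc/sys/net/ipv4/. The following is only a minimal Python sketch for reading them; the function name and its `base` argument are made up for illustration, and it says nothing about which values are "reasonable".

```python
import os

# Names of the fragment-reassembly tunables quoted in the question above.
IPFRAG_PARAMS = ("ipfrag_high_thresh", "ipfrag_low_thresh", "ipfrag_time")


def read_ipfrag_settings(base="/proc/sys/net/ipv4"):
    """Return {parameter: int value} for each tunable found under base.

    A parameter whose file is missing or unreadable (for example on a
    kernel that does not provide it) is skipped instead of raising.
    """
    settings = {}
    for name in IPFRAG_PARAMS:
        path = os.path.join(base, name)
        try:
            with open(path) as handle:
                settings[name] = int(handle.read().strip())
        except (OSError, ValueError):
            continue
    return settings


if __name__ == "__main__":
    for name, value in sorted(read_ipfrag_settings().items()):
        print("%s = %d" % (name, value))
```

Changing a setting means writing a number into the same file as root, so it is worth noting the original values first.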

'Spanking New' Distribution ships with 'development' compiler

Hello TAG-Team,

I just installed Mandrake 8.0. I intend to use gcc (the compiler) quite a bit to recompile lots of software. Mandrake 8.0 ships with a development version of gcc (2.96) but I really want to stick with 2.95.x for stability/portability. How can I remove the development gcc and put an older version in without breaking the system? I know there must be a way to do this via RPM, but it eludes me, and I was seriously frightened to rebuild from an unmanaged source tarball.

-Marc Doughty

Printer memory overflows

I just got an older laser printer and it works very well with an HP LJ III printcap setting, except it has only 1 MB of RAM. This works well until I try to print a PDF; then it runs out of printer memory about 7/8 of the way through the page.

Is there some way to tell ghostscript/lpd to go easy on the thing? I was able to print them fine on my inkjet, and it definitely doesn't have 1 MB of memory installed...

Oh yes, the same .PDF prints fine on the Evil(tm) Operating System.

Thanks.

-- Jonathan Markevich

What happened to e2compr?

I run Linux 2.2.14 on a laptop with a by now small hard drive. To put some huge files (such as graphics in the middle of editing) on it, I installed the e2compr patch to the kernel. I'd like to upgrade to 2.4, but the patch doesn't seem to be available for 2.4. Anybody know what happened to it?

phma

There's at least one new compressed filesystem in the new kernels, but I'm not sure that the one I'm thinking of is really ext2 compatible. Still, you might not need that. There's a curious new style of ramdisk available too. Anyone who knows more is welcome to chime in ... -- Heather

Linux fashion

Greetings

I've been reading the Gazette for a while now, and never found an answer to my simple question: where can I find baby clothes related to Linux? With the Linux logo or something. I want my baby to be cool (and to use Linux) .....

thanks

Erez Avraham

It looks like The Emporium (a UK company) sells child size sweatshirts but I don't know what sizes are good for babies. Comments welcome. If you are a commercial entity which has 'em, let us know, and we'll put you in News Bytes. -- Heather

IRC channels for IPChains

One more quickie: do you know of any IRC channels where I can get some IPChains questions answered? I'm trying to put in a firewall for a client using a script that has worked very well for me for several years (used to be IPMasq, but has been modified for IPChains) but just dies now and for the life of me I can't figure out why.

The only difference in this case that I can see is that the DSL line it sits behind is running Ethernet bridging (PacBell DSL) over an Alcatel modem and the script has been running behind a cable modem (no bridging); but why is that such a "deal-killer"?

Anyway, thanks again!

RAB

Roy Bettle

Tape Backup

Hi Sir,

I am in the process of converting my NT server to a Redhat 7.1 Linux server with Samba on it. The problem is that I'm using an HP SureStoreDAT 40 tape drive, and I can't find a driver for this device anywhere (including the HP and Redhat websites). I really hope that I can realise my dream of setting up 2 Linux servers (with UPS and backup tape drive) at my place. I don't want to scrap the whole project halfway. Do you know where I can get the above driver, or whether a generic driver will do? Or are there any recommendations for a similar tape drive that is well supported by Redhat? If I can't succeed then I'll have to go to Windows 2000 with all those expensive licenses. Thanks

warmest regards,

Desmond Lim

Article idea

Yes, Gentle Readers, this is also in TAG this month, but folks looking for article ideas are encouraged to take this and run with it. For that matter, we have a PostgreSQL related article this month (nielsen.html) but it would be fun to have an article comparing PostgreSQL to MySQL. -- Heather

I would love to see an article about making sense of MySQL. Perhaps some basic commands, and how to do something useful with it.

Here are some basic commands. As far as "something useful", what would you consider useful?

I have found that a lot of articles either lack basic usage and administration or fail to show how to put it all together and have something useful come out of it.

The 'mysql' command is your friend. You can practice entering commands with it, run ad-hoc queries, build and modify your tables, and test your ideas before coding them into a program. Let's look at one of the sample tables that come with MySQL in the 'test' database. First we'll see the names of the tables, then look at the structure of the TEAM table, then count how many records it contains, then display a few fields.

    $ mysql test
    Reading table information for completion of table and column names
    You can turn off this feature to get a quicker startup with -A

    Welcome to the MySQL monitor. Commands end with ; or \g.
    Your MySQL connection id is 1325 to server version: 3.23.35-log

    Type 'help;' or '\h' for help. Type '\c' to clear the buffer

    mysql> show tables;
    +------------------+
    | Tables_in_test   |
    +------------------+
    | COLORS           |
    | TEAM             |
    +------------------+
    2 rows in set (0.00 sec)

    mysql> describe TEAM;
    +------------+---------------+------+-----+---------+----------------+
    | Field      | Type          | Null | Key | Default | Extra          |
    +------------+---------------+------+-----+---------+----------------+
    | MEMBER_ID  | int(11)       |      | PRI | NULL    | auto_increment |
    | FIRST_NAME | varchar(32)   |      |     |         |                |
    | LAST_NAME  | varchar(32)   |      |     |         |                |
    | REMARK     | varchar(64)   |      |     |         |                |
    | FAV_COLOR  | varchar(32)   |      | MUL |         |                |
    | LAST_DATE  | timestamp(14) | YES  | MUL | NULL    |                |
    | OPEN_DATE  | timestamp(14) | YES  | MUL | NULL    |                |
    +------------+---------------+------+-----+---------+----------------+
    7 rows in set (0.00 sec)

    mysql> select count(*) from TEAM;
    +----------+
    | count(*) |
    +----------+
    |        4 |
    +----------+
    1 row in set (0.00 sec)

    mysql> select MEMBER_ID, REMARK, LAST_DATE from TEAM;
    +-----------+-----------------+----------------+
    | MEMBER_ID | REMARK          | LAST_DATE      |
    +-----------+-----------------+----------------+
    |         1 | Techno Needy    | 20000508105403 |
    |         2 | Meticulous Nick | 20000508105403 |
    |         3 | The Data Diva   | 20000508105403 |
    |         4 | The Logic Bunny | 20000508105403 |
    +-----------+-----------------+----------------+
    4 rows in set (0.01 sec)

Say we've forgotten the full name of that Diva person:

    mysql> select MEMBER_ID, FIRST_NAME, LAST_NAME, REMARK
        -> from TEAM
        -> where REMARK LIKE "%Diva%";
    +-----------+------------+-----------+---------------+
    | MEMBER_ID | FIRST_NAME | LAST_NAME | REMARK        |
    +-----------+------------+-----------+---------------+
    |         3 | Brittney   | McChristy | The Data Diva |
    +-----------+------------+-----------+---------------+
    1 row in set (0.01 sec)

What if Brittney McChristy changes her last name to Spears?

    mysql> update TEAM set LAST_NAME='Spears' WHERE MEMBER_ID=3;
    Query OK, 1 row affected (0.01 sec)

    mysql> select MEMBER_ID, FIRST_NAME, LAST_NAME, LAST_DATE from TEAM
        -> where MEMBER_ID=3;
    +-----------+------------+-----------+----------------+
    | MEMBER_ID | FIRST_NAME | LAST_NAME | LAST_DATE      |
    +-----------+------------+-----------+----------------+
    |         3 | Brittney   | Spears    | 20010515134528 |
    +-----------+------------+-----------+----------------+
    1 row in set (0.00 sec)

Since LAST_DATE is the first TIMESTAMP field in the table, it's automatically reset to the current time whenever you make a change.

Now let's look at all the players whose favorite color is blue, listing the most recently-changed one first.

    mysql> select MEMBER_ID, FIRST_NAME, LAST_NAME, FAV_COLOR, LAST_DATE from TEAM
        -> where FAV_COLOR = 'blue'
        -> order by LAST_DATE desc;
    +-----------+------------+-----------+-----------+----------------+
    | MEMBER_ID | FIRST_NAME | LAST_NAME | FAV_COLOR | LAST_DATE      |
    +-----------+------------+-----------+-----------+----------------+
    |         3 | Brittney   | Spears    | blue      | 20010515134528 |
    |         2 | Nick       | Borders   | blue      | 20000508105403 |
    +-----------+------------+-----------+-----------+----------------+
    2 rows in set (0.00 sec)

Now let's create a table TEAM2 with a similar structure as TEAM.

    mysql> create table TEAM2 (
        -> MEMBER_ID int(11) not null auto_increment primary key,
        -> FIRST_NAME varchar(32) not null,
        -> LAST_NAME varchar(32) not null,
        -> REMARK varchar(64) not null,
        -> FAV_COLOR varchar(32) not null,
        -> LAST_DATE timestamp,
        -> OPEN_DATE timestamp);
    Query OK, 0 rows affected (0.01 sec)

    mysql> describe TEAM2;
    +------------+---------------+------+-----+---------+----------------+
    | Field      | Type          | Null | Key | Default | Extra          |
    +------------+---------------+------+-----+---------+----------------+
    | MEMBER_ID  | int(11)       |      | PRI | NULL    | auto_increment |
    | FIRST_NAME | varchar(32)   |      |     |         |                |
    | LAST_NAME  | varchar(32)   |      |     |         |                |
    | REMARK     | varchar(64)   |      |     |         |                |
    | FAV_COLOR  | varchar(32)   |      |     |         |                |
    | LAST_DATE  | timestamp(14) | YES  |     | NULL    |                |
    | OPEN_DATE  | timestamp(14) | YES  |     | NULL    |                |
    +------------+---------------+------+-----+---------+----------------+
    7 rows in set (0.00 sec)

Compare this with the TEAM description above. They are identical (except for the multiple indexes, which we didn't create because this is a "simple" example).

Now, say you want to do a query in Python:

    $ python
    Python 1.6 (#1, Sep 5 2000, 17:46:48) [GCC 2.7.2.3] on linux2
    Copyright (c) 1995-2000 Corporation for National Research Initiatives.
    All Rights Reserved.
    Copyright (c) 1991-1995 Stichting Mathematisch Centrum, Amsterdam.
    All Rights Reserved.
    >>> import MySQLdb
    >>> conn = MySQLdb.connect(host='localhost', user='me', passwd='mypw', db='test')
    >>> c = conn.cursor()
    >>> c.execute("select MEMBER_ID, FIRST_NAME, LAST_NAME from TEAM")
    4L
    >>> records = c.fetchall()
    >>> import pprint
    >>> pprint.pprint(records)
    ((1L, 'Brad', 'Stec'),
     (2L, 'Nick', 'Borders'),
     (3L, 'Brittney', 'Spears'),
     (4L, 'Fuzzy', 'Logic'))
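The connect / cursor / execute / fetchall steps above follow Python's DB-API, so the pattern can be tried without a MySQL server. The sketch below swaps in the standard-library sqlite3 module as a stand-in (an assumption made so that it runs anywhere, not what the session above used) and rebuilds a cut-down TEAM table from the rows already shown. It is written for a current Python 3 rather than the 1.6 interpreter in the session, and it passes the search text as a parameter instead of pasting it into the SQL string. The helper names are invented for the example; note that sqlite3 uses `?` placeholders where MySQLdb uses `%s`.

```python
import sqlite3

# Rows taken from the TEAM examples above (after the change to "Spears").
TEAM_ROWS = [
    (1, "Brad", "Stec", "Techno Needy"),
    (2, "Nick", "Borders", "Meticulous Nick"),
    (3, "Brittney", "Spears", "The Data Diva"),
    (4, "Fuzzy", "Logic", "The Logic Bunny"),
]


def make_demo_db():
    """Create an in-memory database holding a cut-down TEAM table."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "create table TEAM ("
        " MEMBER_ID integer primary key,"
        " FIRST_NAME text not null,"
        " LAST_NAME text not null,"
        " REMARK text not null)"
    )
    conn.executemany("insert into TEAM values (?, ?, ?, ?)", TEAM_ROWS)
    conn.commit()
    return conn


def find_by_remark(conn, fragment):
    """Return (MEMBER_ID, FIRST_NAME, LAST_NAME) rows whose REMARK contains fragment."""
    c = conn.cursor()
    # The driver binds the value to the placeholder, so quote characters
    # in `fragment` cannot break the statement.
    c.execute(
        "select MEMBER_ID, FIRST_NAME, LAST_NAME from TEAM"
        " where REMARK like ? order by MEMBER_ID",
        ("%" + fragment + "%",),
    )
    return c.fetchall()


if __name__ == "__main__":
    print(find_by_remark(make_demo_db(), "Diva"))
```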

Another approach is to have Python or a shell script write the SQL commands to a file and then run 'mysql' with its standard input coming from the file. Or in a shell script, pipe the command into mysql:

    $ echo "select REMARK from TEAM" | mysql -t test
    +-----------------+
    | REMARK          |
    +-----------------+
    | Techno Needy    |
    | Meticulous Nick |
    | The Data Diva   |
    | The Logic Bunny |
    +-----------------+

(The -t option tells MySQL to draw the table decorations even though it's running in batch mode. Add your MySQL username and password if required.)
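The "have Python write the SQL and feed it to mysql on standard input" approach might look roughly like this in a current Python 3. This is a sketch under assumptions: the two function names are invented here, only the `-t`, `-u` and `-p` options already shown in this article are used, and actually running it requires the mysql client and a reachable server.

```python
import subprocess


def mysql_batch_command(database, user=None, password=None, table=True):
    """Build the argument list for running the mysql client in batch mode."""
    argv = ["mysql"]
    if table:
        argv.append("-t")              # draw table borders, as in the example above
    if user is not None:
        argv.extend(["-u", user])
    if password is not None:
        argv.append("-p" + password)   # no space between -p and the password
    argv.append(database)
    return argv


def run_sql(sql, database, **options):
    """Send sql to the mysql client on standard input and return its output."""
    result = subprocess.run(
        mysql_batch_command(database, **options),
        input=sql,
        stdout=subprocess.PIPE,
        universal_newlines=True,
        check=True,                    # raise if mysql exits with an error
    )
    return result.stdout


if __name__ == "__main__":
    print(run_sql("select REMARK from TEAM;\n", "test"))
```

Keeping the command construction in its own function makes it easy to check what would be run before anything touches the database.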

'mysqldump' prints a set of SQL commands which can recreate a table. This provides a simple way to backup and restore:

    $ mysqldump --opt -u Username -pPassword test TEAM >/backups/team.sql
    $ mysql -u Username -pPassword test </backups/team.sql

This can be used for system backups, or for ad-hoc backups while you're designing an application or doing complex edits. (And it saves your butt if you accidentally forget the WHERE clause in an UPDATE statement and end up changing all records instead of just one!)
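The forgotten-WHERE accident can also be caught at the moment it happens, by checking how many rows an UPDATE reports before keeping it. The following is a minimal sketch using Python's standard sqlite3 module as a stand-in database; `guarded_update` is a made-up helper, not a MySQL or DB-API feature. It relies on the database being able to roll a change back, which in MySQL depends on the table type, so treat it as a complement to the mysqldump backup rather than a replacement.

```python
import sqlite3


def guarded_update(conn, sql, params=(), expected_rows=1):
    """Run an UPDATE and keep it only if it touched the expected number of rows.

    Returns the row count reported by the statement. The change is rolled
    back when that count differs from expected_rows.
    """
    cursor = conn.cursor()
    cursor.execute(sql, params)
    changed = cursor.rowcount
    if changed == expected_rows:
        conn.commit()
    else:
        conn.rollback()
    return changed


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("create table TEAM (MEMBER_ID integer primary key, LAST_NAME text)")
    conn.executemany(
        "insert into TEAM values (?, ?)",
        [(1, "Stec"), (2, "Borders"), (3, "McChristy"), (4, "Logic")],
    )
    conn.commit()

    # WHERE clause forgotten: every row would change, so nothing is kept.
    print(guarded_update(conn, "update TEAM set LAST_NAME = 'Spears'"))

    # Intended statement: exactly one row changes and is committed.
    print(guarded_update(
        conn, "update TEAM set LAST_NAME = ? where MEMBER_ID = ?", ("Spears", 3)))
```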

You can also do system backups by rsyncing or tarring the /var/lib/mysql/ directory. However, you run the risk that a table may be in the middle of an update. MySQL does have a command "LOCK TABLES the_table READ", but interspersing it with backup commands in Python/Perl/whatever is less convenient than mysqldump, and trying to do it in a shell script without running mysql as a coprocess is pretty difficult.

The only other maintenance operation is creating users and assigning access privileges. Study "GRANT and REVOKE syntax" (section 7.25) in the MySQL reference manual. I always have to reread this whenever I add a database. Generally you want a command like:

    mysql> grant SELECT, INSERT, DELETE, UPDATE on test.TEAM to somebody
        -> identified by 'her_password';
    Query OK, 0 rows affected (0.03 sec)

This will allow "somebody" to view and modify records but not to change the table structure. (I always alter tables as the MySQL root user.) To allow viewing and modifying of all current and future tables in database 'test', use "on test.*". To allow certain users access without a password, omit the "identified by 'her_password'" portion. To limit access according to the client's hostname, use 'to somebody@"%.mysite.com"'.

Remember that MySQL usernames have no relationship to login usernames.

To join multiple tables (MySQL is a "relational" DBMS after all), see "SELECT syntax" (section 7.11). Actually, all of chapter 7 is good to have around for reference. The MySQL manual is at http://www.mysql.com/doc/

-- Mike Orr

bash string manipulating

On Thu, 10 May 2001, you wrote:

I realize that this question was quite old, but I just came across it while cleaning out my inbox. Here's a couple of quick suggestions:

Thanks very much, very useful.

First: don't use this sort of "pseudo array." If you want an array (perhaps an associative array, what PERL calls a "hash") then use an array. Korn shell supports associative arrays. Bash doesn't. With other shells, you'll have to check.

Not easy when you have to work with what is given.

Actually I ditched it all and rewrote the app in XML and XSLT.

///Peter

Linux in Africa

Martin is one of our authors.

Hi,

This is to inform the world of an idea we are playing with. I work for a 3rd world aid organisation and recently returned home from a trip to Dar es Salaam, Tanzania. One of the ideas I brought back with me was the wish of some of our member organisations to set up some kind of computer training in "rural" Tanzania.

The interest in computers and computer-aided training is great; the means of buying computers is non-existent. So the idea is to set up training centres using "second hand" computers. My idea is to have these equipped with Linux and Star Office, which will be ideal in terms of pricing and stability - if not perhaps in trained staff.

All of this is of course only in a very early stage of planning, but we hope to go ahead with the project at the latest during next year. If anyone else has any experiences of similar projects I am interested in hearing from you!

Regards,

Martin Skjöldebrand CTO, Forum Syd The Swedish NGO Centre for Development Co-operation.

Your reply

Thank you for your reply...I know I answered it already but at that point I had only seen what was written on the TAG site (board/whatever) which was very brief. Your email to me had not arrived at that point, so I didn't get much of a message from you - as you may have gathered from my answer to it. Sorry about my email settings...I was sending from a machine which was only just set up and running on defaults which I hadn't looked at. (Or maybe it was the setup of the message board....perhaps I pressed an "include html tags" button or something, not thinking. I really can't remember). I'll pay more attention to it in future. As for your answer, thank you very much. It will help me in the future, I'm sure. I don't really know anyone who I can talk to about this sort of stuff (computers) so reading what I can find and filing away little tips like that is pretty much my sole reference source when things f up. I only found out that TAG even existed on the weekend, so maybe I'll write again sometime. A really useful site.

Thanks again and keep up the good work helping people.

All the best, Peter.

On Sun, May 06, 2001 at 06:12:32AM +0100, Peter P wrote:

Content-Type: text/html; charset=iso-8859-1

Don't do that, please. Sending e-mail in any format other than plain text lowers the chances of your question being answered. It's impolite ... "Bad signature" is, of course, a software-dependent error, but it seems to be a pretty standardized one: what it usually means is that something scribbled over the last couple of bytes of the first sector on the drive. ...

just so you know...

That others have been helped by having this out there. Thanks!

Submission: A tired Newbie attempts Linux

Of all the articles I have read on how wonderful Linux is, seldom have I seen any that [cynically] document how the average Windows user can go from mouse-clicking dweeb to Linux junkie. Perhaps such an article does not exist? Or, maybe those that made the jump to Linux have forgotten the hoops us Win-dweebs are still facing.

A few years back, when this giant Linux wave began to crest, I was working for a local Electronics Boutique (EB) store for a few hours a week. Microsoft was in the news almost daily, and as the lawsuit against it ground toward a close, anything Linux fared very well in the stock market and in the software reviews, it seemed. Heck, even EB was beginning to stock games for Linux; maybe this is the little OS that can make it after all. So, like others, I took the dip into Linux, bringing home a copy of RedHat and pretty much every version since.

< Buying Linux >

Perhaps the first thing to be forgotten about Linux versus Windows was "Hey, Linux is FREE". What someone forgot to tell the rest of the world was that it's one helluva download that doesn't always like to finish. And, up here in the NorthEast (Maine specifically), broadband wasn't here, so your idea of a good download was a 5.3k connect on the 56k modem! So, off to the store to buy a copy for $30 or so. Then, not more than 3 months later, another build is out! Off we go and spend another $30....and repeat this process a few more times to our current build. Hmmm, well, it's cost me more than all my Microsoft updates, and the Windows Update button sure is vastly easier than the Linux equivalent(s).

So, the claim of FREE FREE FREE really isn't so....I've found other places that you can buy a CD copy cheaper but still, some money negates the FREE.

Many free software notables would stand firmly on the point that "free" in "free software" is not about money, it's about your ability to improve, debug, or even use these applications after their original vendor gives up on them, disappears, or even simply turns to other things.

On the flip side(s) of this coin (these dice?), there are some who say "some work negates the FREE" ... such as your note below ... and those for whom a "free download" (which is certainly available for most Linux variants) is really quite expensive. Thus the appearance and eventual success of companies pre-loading Linux. - Heather

< Install...I dare you...>

Linux this, Linux that...that's all we've heard. Microsoft is bad (say it using the Napster Baaad sound effect from the cartoon portraying Lars Ulrich). So now we give it the go, and guess what? The Linux operating system that wanted so much to be different from Windows looks JUST LIKE IT. Now while I will concede it IS easy to jump into for a user like me, all the books I had seemed to point to the beauty of working in the shell.

And another favorite of mine, something I can't understand at all. Why doesn't Linux do the equivalent of a DOS PATH command? Newbie Me is trying to shutdown my system and I, armed with book, type "shutdown -h now" and am told 'command not found'. But wait, my book says...etc etc....and of course, I now know you have to wander into sbin to make things happen. Why such commands aren't pathed like DOS is beyond me....perhaps that's another HowTo that has eluded me.

<...and the adventure continues...>

And now, two years later, I'm pleased to inform you that I have three Linux machines on my network; two are DNS servers and the other acts as my TUCOWS mirror. The DNS servers work great....their version of BIND was flawed and five days into service, they were hacked into. It's just not fair, is it? But my local Linux Guru solved the problem with a newer version of BIND and he's been watching over the machines to date. While I am still trying to learn more, it's a slow process for a WinDweeb. While others wait for their ship to come in, I'm hunting for that perfect HowTo to guide me into the halls of Linux Guru-Land.

Paul Bussiere

Paul later noted that he meant this "tongue in cheek"; meanwhile, The Answer Gang answered him (see this month's TAG). Still, Linux Gazette will cheerfully publish articles that help the true Newbie have a little more fun with Linux. If you have a tiny piece of his "WinDweeb-to-LinuxGuru-HOWTO" waiting in you, check out our author guidelines, and then let us know! -- Heather
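On the PATH question raised in the letter: Linux shells do have a PATH variable, much like DOS. On many distributions the administrative directories such as /sbin and /usr/sbin are simply left off an ordinary user's PATH, which is why "shutdown" can come back as "command not found" until the full path is used. Here is a small Python sketch of that lookup; the directory list and the function name are assumptions chosen for illustration, and the exact layout varies between distributions.

```python
import os
import shutil

# Directories that commonly hold administrative commands. Whether they are
# on an ordinary user's PATH varies between distributions (assumption).
SBIN_DIRS = ("/sbin", "/usr/sbin", "/usr/local/sbin")


def locate_command(name, extra_dirs=SBIN_DIRS):
    """Return (path, on_path) for name, or (None, False) if it is not found.

    on_path is True when the command is reachable through the current PATH,
    and False when it was only found in one of extra_dirs.
    """
    found = shutil.which(name)
    if found is not None:
        return found, True
    found = shutil.which(name, path=os.pathsep.join(extra_dirs))
    return found, False


if __name__ == "__main__":
    path, on_path = locate_command("shutdown")
    if path is None:
        print("shutdown: not found")
    elif on_path:
        print("shutdown is on PATH at", path)
    else:
        print("shutdown is not on PATH; try", path)
```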

GIF -> PNG

Soon, all GIF images in the back issues of LG will be converted to PNG or JPG format. If you have a graphical browser that doesn't display PNG images properly (like the ones in The Weekend Mechanic article), speak up now.

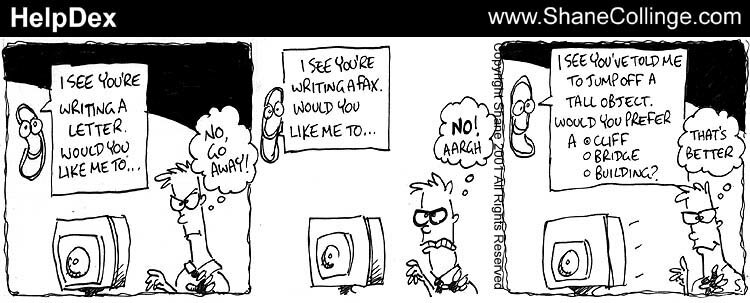

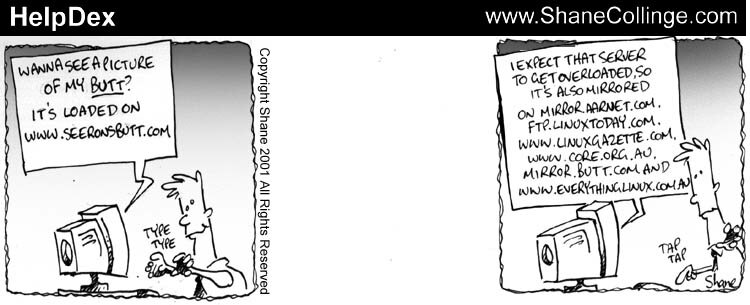

HelpDex is alive again!

Hi all,

I'll try to get right to the point. It's been two months since HelpDex finished up on LinuxToday.com. Since then, strips have only been appearing on www.LinuxGazette.com but nowhere else. A huge thanks to Mike from LG for this.

Can you spread the word for me please? The more I know I'm wanted, the more likely I am to not be lazy.

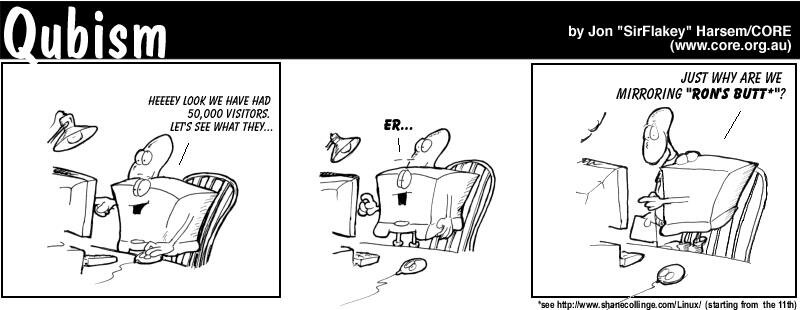

Oh, and there's also plenty of cool reading around. Check out Sir JH Flakey (http://www.core.org.au/cartoons.php) and of course, ANY book that comes out of O'Reilly.

Shane


Submitters, send your News Bytes items in PLAIN TEXT format. Other formats may be rejected without reading. You have been warned! A one- or two-paragraph summary plus URL gets you a better announcement than an entire press release.

June 2001 Linux Journal


The June issue of Linux Journal focuses on world domination! No, actually it focuses on Internationalization & Emerging Markets, but it does have a cool cover picture of penguins erecting a Linux flag on the South Pole, with sixteen national flags in the background. Inside, there's a security article called "Paranoid Penguin", and a game review about taming monsters (Heroes of Might and Magic III).

Copies are available at newsstands now. Click here to view the table of contents, or here to subscribe.

All articles through December 1999 are available for public reading at http://www.linuxjournal.com/lj-issues/mags.html. Recent articles are available on-line for subscribers only at http://interactive.linuxjournal.com/.

May/June 2001 Embedded Linux Journal


The May/June issue of Embedded Linux Journal focuses on Cross Development and includes an overview of the second ELJ contest, based on the New Internet Computer (NIC). Subscriptions are free to qualified applicants in North America - sign up at http://embedded.linuxjournal.com/subscribe/.

Linux Journal's 2001 Buyer's Guide


Linux Journal's 2001 Buyer's Guide is on sale now. It is the only comprehensive directory of Linux-related vendors and services, and is well-known as the definitive resource for Linux users. The guide includes a quick-reference chart to more than twenty Linux distributions, and with over 1600 listings, the guide is bigger than ever. For more information visit http://www.linuxjournal.com/lj-issues/issuebg2001/index.html.

The guide is available on newsstands through August 1, 2001, and is available at the Linux Journal Store on-line at http://store.linuxjournal.com/.

Russian Distributions of Linux

This spring two new distributions of Linux came out in Russia.

ASPLinux is based on Red Hat 7.0. It has been modified to provide the Linux 2.4 kernel as an installation option, and has a new installation program that can be run from Windows to partition the disk and install ASPLinux as a dual-boot option on an existing Windows machine. The installation program is called EspressoDownload. Although ASPLinux has strong Singaporean connections, the development team is largely Russian.

ALT Linux is a descendant of Mandrake Linux. The creators of this distribution were previously known as IP Labs Linux Team, but now have a firm of their own. ALT Linux are distributing a beta version of their new server distribution, ALT Linux Castle. This distribution will have crypt_blowfish as its main password hashing algorithm and a chrooted environment for all base services. Download is available.

Caldera

Caldera Systems, Inc. has announced its completion of the acquisition of The Santa Cruz Operation, Inc. (SCO) Server Software and Professional Services divisions, UnixWare and OpenServer technologies. Caldera will now be able to offer "customized solutions through expanded professional services". Furthermore, Caldera has also acquired the assets of the WhatifLinux technology from Acrylis Inc. WhatifLinux technology provides Open Source users and system administrators with Internet-delivered tools and services for faster, more reliable software management.

Caldera has announced the launch of the Caldera Developer Network. Caldera developers, including members of the Open Source developer community, will have early access to UNIX and Linux technologies, allowing them to develop on UNIX, on Linux, or on a combined UNIX and Linux platform. This, plus the network's worldwide support and additional services, will enable members to build and develop their products with globally portable applications and get to market faster.

Mandrake

MandrakeSoft have just announced the availability of their latest version, 8.0, in download format. This includes the newest versions of the graphical environments KDE (2.1.1) and GNOME (1.4), featuring many new enhancements and applications. The 8.0 version promises to be the most powerful and complete Linux-Mandrake distribution while at the same time retaining the simplicity of installation and use that has made MandrakeSoft a recognized leader in the Linux field.

Rock

Version 1.4 of ROCK Linux is ready to roll. This version is "intended" for production use, although the announcement warns that waiting another minor release or two would be prudent. ROCK is often referred to as being "harder to install" than other distributions. This is not strictly true. It aims to remain as close to the upstream software as possible rather than offering distribution bells and whistles. That said, a binary install is pretty easy, and a source install is not out of the question for an experienced user. ROCK does not contain an intrusive set of system administration utilities. The ROCK philosophy might be worth reading, as would their guide.

SuSE

SuSE Linux 7.2 will be available June 15th. It includes kernel 2.4.4, KDE 2.1.2 and GNOME 1.4.

In addition, 7.2 for Intel's Itanium-based (64-bit) systems will be released June 20th, but this version will be available directly from SuSE only.

Upcoming conferences and events

Listings courtesy Linux Journal. See LJ's Events page for the latest goings-on.

| Event | Dates | Location | URL |
|---|---|---|---|
| Linux Expo, Milan | June 6-7, 2001 | Milan, Italy | http://www.linux-expo.com |
| Linux Expo Montréal | June 13-14, 2001 | Montréal, Canada | http://www.linuxexpomontreal.com/EN/home/ |
| Open Source Handhelds Summit | June 18-19, 2001 | Austin, TX | http://osdn.com/conferences/handhelds/ |
| USENIX Annual Technical Conference | June 25-30, 2001 | Boston, MA | http://www.usenix.org/events/usenix01 |
| PC Expo | June 26-29, 2001 | New York, NY | www.pcexpo.com |
| Internet World Summer | July 10-12, 2001 | Chicago, IL | http://www.internetworld.com |
| O'Reilly Open Source Convention | July 23-27, 2001 | San Diego, CA | http://conferences.oreilly.com |
| 10th USENIX Security Symposium | August 13-17, 2001 | Washington, D.C. | http://www.usenix.org/events/sec01/ |
| HunTEC Technology Expo & Conference (hosted by Huntsville IEEE) | August 17-18, 2001 | Huntsville, AL | URL unknown at present |
| Computerfest | August 25-26, 2001 | Dayton, OH | http://www.computerfest.com |
| LinuxWorld Conference & Expo | August 27-30, 2001 | San Francisco, CA | http://www.linuxworldexpo.com |
| Red Hat TechWorld Brussels | September 17-18, 2001 | Brussels, Belgium | http://www.europe.redhat.com/techworld |
| The O'Reilly Peer-to-Peer Conference | September 17-20, 2001 | Washington, DC | http://conferences.oreilly.com/p2p/call_fall.html |
| Linux Lunacy (co-produced by Linux Journal and Geek Cruises). Send a Friend LJ and Enter to Win a Cruise! | October 21-28, 2001 | Eastern Caribbean | http://www.geekcruises.com |
| LinuxWorld Conference & Expo | October 30 - November 1, 2001 | Frankfurt, Germany | http://www.linuxworldexpo.de |
| 5th Annual Linux Showcase & Conference | November 6-10, 2001 | Oakland, CA | http://www.linuxshowcase.org/ |
| Strictly e-Business Solutions Expo | November 7-8, 2001 | Houston, TX | http://www.strictlyebusinessexpo.com |
| LINUX Business Expo (co-located with COMDEX) | November 12-16, 2001 | Las Vegas, NV | http://www.linuxbusinessexpo.com |
| 15th Systems Administration Conference/LISA 2001 | December 2-7, 2001 | San Diego, CA | http://www.usenix.org/events/lisa2001 |

Linux@work Europe 2001 for FREE -- call for participation.

For the third year, LogOn Technology Transfer will be organizing a series of Linux events throughout Europe called "Linux@work". Each "Linux@work" consists of a conference and an exhibition. These 1-day, city-to-city events will take place in several European venues in 2001. Among the keynote speakers: Jon "maddog" Hall, President of Linux International, and Robert J. Chassel, Executive Director of the Free Software Foundation. To register and for the full conference programs: http://www.ltt.de/linux_at_work.2001/.

Linux NetworX Expands Market Reach into Europe

Linux NetworX, a provider of Linux cluster computing solutions, has announced an international partner/distributor agreement with France-based Athena Global Services. Athena Global Services, a leading value-added distributor of new IT technologies in France, is the first authorized Linux NetworX distributor in Europe. The Linux NetworX newsletter has more details.

TeamLinux | explore New Interactive Kiosk Product Line

TeamLinux | explore have announced the immediate availability of a complete product line including six new units. Ranging in suggested base price from $1,499 to $6,500, the kiosks are designed for a wide variety of business environments and offer a selection of optional feature packages to suit the multimedia and transactional needs of users. TeamLinux | explore's new kiosk line incorporates multiple performance and peripheral options including touch screens, printers, magnetic card devices, modems, keyboard and pointing devices, videoconferencing capabilities, and wireless connectivity.

IBM Offers KDE Tutorial

IBM has added a free tutorial on desktop basics using "K Desktop Environment" or KDE to its growing collection on the developerWorks Linux Zone. This tutorial will teach Linux users of every level to customize their own KDE GUI. Released February 26, KDE 2.1 addresses the need for an Internet-enabled desktop for Linux.

Linux Breakfast

Times N Systems is hosting a technology breakfast series and would like to invite you. Their technology focuses on IP-SAN and storage virtualization...and works well with Linux.

The breakfast is educational and they have got Tom Henderson from Extreme Labs coming to speak. RSVP online.

Linux Links

Python

Bad economy is good for open source.

Microsoft denounces open source.

mamalinux is one of Montreal's largest Linux portals.

May 9 (1996) was the day that Linus Torvalds said he wanted a penguin to be the mascot for Linux... a cute and cuddly one at that... :). So, belatedly, you can view A Complete History of Tux (So Far) as a kind of birthday celebration.

FirstLinux.com are watching TV with Zapping under Linux.

A correspondent has recently written an article showing how Xalan-J can be used in a Java servlet to perform XSL transformations and to output HTML and WML. Perhaps of interest.

ssh 2.9

ssh 2.9 has been released. Thanks to LWN for the story.

Aladdin StuffIt: Linux and Solaris Betas

Aladdin Systems, Inc. unveiled public beta versions of StuffIt, its compression technology, and StuffIt Expander, a decompression utility, for Linux and for Sun's Solaris operating systems. StuffIt for Linux and Solaris can be used to create Zip, StuffIt, Binhex, MacBinary, Uuencode, Unix Compress, and self-extracting archives for Windows or Macintosh platforms, and it can expand all of the above plus tar files, bzip, gzip, arj, lha, rar, BtoAtext and Mime. The StuffIt public beta for Linux can be downloaded at www.aladdinsys.com/StuffItLinux/, and StuffIt for Solaris beta can be downloaded at http://www.aladdinsys.com/StuffItSolaris/.

XFce

XFce is a GTK+-based desktop environment that's lighter in weight (i.e., uses less memory) than Gnome or KDE. Applications include a panel (XFce), a window manager (XFwm), a file manager, a backdrop manager, etc. Version 3.8.1 includes drag and drop, and session management support. Several shell scripts are provided as drag and drop "actions" for panel controls (e.g., throw a file into the trash, print a file). All configuration is via mouse-driven dialogs.

Heroix Announces Heroix eQ Management Suite for Linux

Heroix Corporation have released the Heroix eQ Management Suite, which unifies management of Windows 2000, Windows NT, Unix, and Linux Systems. The new product family improves the performance and availability of eBusiness and other critical applications by unifying monitoring and management of multiplatform computing environments.

New Product Brings Computation to the Web

Wolfram Research Inc. is pleased to announce the upcoming release of webMathematica, which is a solution for including interactive computations over the web. While not yet officially released, it is currently available to select customers under the preview program.

There has been significant interest in webMathematica during the testing phase, resulting in several new partnerships for Wolfram Research. Select banks, engineering firms, and other institutions are already using webMathematica. See http://library.wolfram.com/explorations for examples of possible webMathematica applications.

Lutris To Speed Development of Web and Mobile Applications with Hewlett Packard

Lutris Technologies Inc., a provider of application server technology for wired and wireless development and deployment, has announced a sales and marketing agreement with Hewlett-Packard to deliver Lutris Enhydra 3.5 to HP's customers for developing and deploying enterprise-level applications on HP Netserver systems running Linux and Windows 2000. The solution will enable the creation and deployment of Internet and wireless Web applications.

QuickDNS 3.5 for Linux

Reykjavik, Iceland-- Men & Mice release QuickDNS 3.5, a comprehensive DNS management system for Linux systems. QuickDNS has been the leading DNS management system for Mac for nearly 5 years. QuickDNS on Linux will enable simultaneous management of DNS servers on different platforms, using an easy-to-use interface. Setting up QuickDNS is simple as it runs on top of BIND 8.2.x.

QuickDNS 3.5 retails for $495 for one licence and $790 for a pack of two licences. Free downloads are also available.

Opera News

Opera Software and Google Inc., developer of the award-winning Google search engine, have signed a strategic agreement under which Opera will integrate Google's advanced search technology into its search box feature on the Opera Web Browser. Available now, direct access to Google's search technology enables Opera users to quickly search and browse more than 1.3 billion Internet pages.

Opera Software have launched a new version of Opera for Linux. Opera 5 (final) offers an Internet experience for the Linux platform as hassle-free as on Windows. Today's release affirms Opera Software's leadership in cross-platform browser development.

In addition to the usual Opera features such as speed, size and stability, users will find exciting features not yet implemented in the Windows version. The extensive customization possibilities for user settings, additional drag-and-drop features and the Hotlist search function are features only available in the Linux version. An ad-supported version of Opera 5 is available for free download.

New Free Xbase Compiler: Max 2.0

PlugSys have announced availability of Max 2.0 Free Edition, the 32-bit Xbase compiler for Linux and Windows providing free registration to application developers worldwide. Using classic Xbase commands and functions, Max developers write character-based applications that access data from FoxPro, dBASE and Clipper. To ensure scalability, Max also connects to all popular SQL databases. The product can be downloaded from the PlugSys.com web site.

Loki Games and Nokia

Nokia and Loki have formed an Agreement to Distribute Linux Games with the Nokia Media Terminal, a new "infotainment" device that combines digital video broadcast, gaming, Internet access, and personal video recorder technology. As part of the agreement Linux-based games from Loki will be pre-installed on the Media Terminal. Anticipated roll out of the Media Terminal will be early Fall in Europe. Nokia is demonstrating the Media Terminal and will show the ostdev.net open source network at the E3 exhibition in Los Angeles 16-19 May.

Loki Software, Inc. have announced that MindRover: The Europa Project for Linux will ship on Wednesday, May 23. MindRover from CogniToy is the 3D strategy/programming game enabling players to create autonomous robotic vehicles and compete them in races, battles and sports. MindRover has an SRP of $29.95, and is now available for preorder from the new Loki webstore. A list of resellers is also available.

VMware/Cisco Multiple OSs on a Single Box and Streaming Media

Version 0.7.1 of OSALP Available

Beta release 0.7.1 of the Open Source Audio Library Project has been released (Linux, Solaris Sparc, and FreeBSD). The OSALP library is a C++ class library that provides the functionality one needs to perform high level audio programming. The base classes allow for building audio functionality in a chain. The derived classes support such functions as audio editing, mixing, timer recording, reading, writing, and a high quality sample rate converter. New in the 0.7.1 release is support for FreeBSD, numerous bug fixes, new Makefile system, and a new mp3 reader module based on the open source splay library.

Other software

The Answer Gang

There is no guarantee that your questions here will ever be answered. You can be published anonymously - just let us know!

Greetings from Heather Stern

For those of you who've noticed this ran late, sorry 'bout that! I had a DSL outage ... in fact, if it had just plain died it might have been easier, since I would have known to reach for a backup plan.

But, things are all better now. Boy have I got a new appreciation for the plight of those stuck behind a slow dialup line. Ouchie. Now we have a brand new router and a freshly repaired external DSL drop.

Okay, enough of that. I want to give a big hand of applause for the new, improved Answer Gang. The reason the Gang deserves a giant standing ovation is that over 400 slices of mail passed through the box this month. That's about twice as many as the month before... and a lot of people got answers.

As always I remind you that we can't guarantee that you'll get one... and nowadays I can't even manage to publish all the good ones. I stopped pubbing the short-and-sweet FAQs a few issues ago.

We have some summary bios. Not everyone -- some of us are shy -- but now you can know a few of the Gang a bit better.

Last but not least, there's a big thanks to my Dad in there. Enjoy!

Need a Free StarOffice Killer

From Anil

Answered By Thomas Adam, Heather Stern

Hi,

I would like to know which is an alternative for staroffice5.2 . i need all word excel powerpoint in one package which acts as an substitute for star office . moreover the package should be freely available in net

Regards

Anil

[Thomas] Hi Anil,

I believe that the only package that would offer what you wanted would be the commercial product ApplixWare......

HTH,

Thomas Adam (The Linux Weekend Mechanic)

[Heather] Pretty tall order, looking for an MS clone and not Star Office. Try its source version, OpenOffice (http://www.openoffice.org). Thomas is right that Applix is the nearest competitor. You can try demos of that in several distros.

You mention MS' products by name so if you hope for file exchangeability, Siag Office won't be a usable substitute. If you don't care about that, don't limit yourself to a bundled office.

There are plenty of shots at word processors (some of them even pretty good, regardless of my editorial rants), more spreadsheets than I dare count, and a presentation package or two, available "unbundled" (the Gnome and K environments don't require you to get all of their apps) but again, their talents at handling MS' proprietary formats are severely limited.

Abiword is free and able to give Word files a half-decent shot at loading up. If you stick with RTF exports, a lot more things would work, but I know MS doesn't export everything useful when they do that. It doesn't export viruses that way either.

Xess looks to me to be the best Excel clone for Linux, but is also a commercial app. It will definitely read Excel files.

For Powerpoint, well... Magicpoint won't read it. Magicpoint is a decent presentation program, but designed to be much simpler, and let you embed cool effects by "swallowing" running app windows. It's very much designed for X rather than anything else. On the plus side, its files are tiny, since they're plaintext (albeit with a layout). Offhand, I don't know of any free software that loads Powerpoint slides.

If none of those are good enough, expect to pay commercial prices for commercial quality work. "Demo" does not mean "excuse to rip off the vendor"; it means "chance to try the product before buying it if you like it". The "freedom" in open source work is about being able to use and improve tools long after their original vendors/authors have ditched them, not about putting the capitalist economic system on its ear.

pps files

From adrian darrah

Answered By Ben Okopnik, Karl-Heinz Herrmann

Hello, I've been sent a "pps" file from someone at his place of work. Can you advise where best to download the necessary software from internet source to open such a file.

Many thanks Adrian Darrah

[Karl-Heinz] From:

http://www.springfieldtech.com/HOW_DO/File_type.htm

I get

.PPS MS Power Point Slide Show file

So this is probably a proprietary Microsoft Power Point file. I was just going to say that _free_ and M$ don't go well together, but there seem to be some Linux projects:

- Magic Point:

- http://www.freeos.com/articles/3648

Though this looks like a "Power Point" replacement, I can't find a comment stating it will read/use PPS files.

[Heather] It doesn't. Its own format is plaintext and many cool effects are generated by instructing it to run X apps "swallowed" within its own window. The only relation is the word "Point" and they're both presentation apps.

[Karl-Heinz] It seems StarOffice can open power point files (.ppt) maybe also pps ones. http://www.pcs.cnu.edu/linux/wwwboard/messages/283.html

[Dan] I've had pretty good luck viewing Power Point presentations with StarOffice.

[Karl-Heinz] All other search engine results ( http://www.google.de ) concerned the power of some floating point number.....

My conclusion would be: Get a different file format, or MS Power Point if you have to use those files; Star Office might also be an option.

[Heather] I found list archives indicating that Applix also works, and a lost reference to a German site (http://www.lesser-software.com) that might once have had an effort towards one in Squeak or Smalltalk, but there was no download link and I don't read German. Oh yeah, and a bazillion sites pointing at the MSwin or Mac PPT viewer, with Linux mentioned in their footer or sidebar. Sigh.

Checks vs. Plain Paper

From James McClure

Answered By Dan Wilder, Jim Dennis, Ben Okopnik, Heather Stern, Mike Orr

I need to find a way to print to specific forms, such as checks, invoices, etc. Whenever my accounting people get ready to print out checks, it never fails that someone will send a print-job to the printer. It will then be printed on the checks instead of normal paper. Is there a way to accomplish this through LP... from what I've tried, read, and heard... I've had NO LUCK!

Any help is appreciated!

James McClure

[Dan] You did not mention what operating system you're using.

Apologies... I'm running RedHat 6.1 (Kernel 2.2) with LPD.

James

There are at least 4 types of possible answer...

0. Have a separate printer, then you don't need this question.

[Ben] The "real" solution is to have a separate check printer; anything less is going to require juggling, and anything we can suggest here that's short of that is going to be painful in some way. <shrug> That's Life with Band-Aids for you.

[Dan] In the Bad Old Days of twenty different pre-printed forms on the shelf, everybody's mini-mainframe had forms management built in to the OS. It didn't seem so awful to us then. It certainly beat spending umpteen thousands of dollars a month to lease twenty different printers, most of them seldom used, from IBM. Not to mention the impact of the 3' by 4' by 4' form factor!

If you were printing more than one pre-printed form, say, checks drawn against five different accounts, each once a month, having five dedicated printers sitting idle most of the time, and a sixth for everyday use, would seem maybe just a little wasteful of the equipment budget.

1. Construct a scripted front end to help you handle it:

[Dan] This one begs for a forms management interface program. With a long-running interface program on the system console that would display, for example,

- On printer laz insert form "company 1 checks" and hit ENTER

- [ prints check job after ENTER ]

- On printer laz insert form "plain letter-size paper" and hit ENTER

- [ prints the plain paper job ]

[JimD] Yuck! A console requires babysitting.

[Dan] The printer requires babysitting anyway. When you change forms. If the console's next to the printer, there's no additional work to speak of.

[Dan] The application would be run as the login shell of a printer control user, who would normally be logged into some terminal near the printer. It would assume some default form at its startup time, and merrily release print jobs so long as they call for the available form, holding the print queue when the next job up calls for a form that is not currently inserted.
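The hold-or-release decision described above can be sketched in a few lines of shell. This is only an illustration of the logic, not an existing tool: the function name next_action and the "jobid form" input format are made up for the example, and nothing here talks to a real spooler.

```sh
#!/bin/sh
# Hypothetical sketch: given the form currently in the printer, walk the
# queue in order and release jobs until one needs a different form.
# Input on stdin: one job per line, "jobid form name" (assumed format).
next_action() {
    mounted=$1
    while read -r jobid form; do
        if [ "$form" = "$mounted" ]; then
            # Job matches the mounted form: let it print.
            printf 'release %s\n' "$jobid"
        else
            # First mismatch: stop here and ask the operator to swap forms.
            printf 'hold: insert form "%s" and hit ENTER\n' "$form"
            return 0
        fi
    done
}
```

For example, feeding it three jobs with plain paper mounted (printf '101 plain\n102 company 1 checks\n103 plain\n' | next_action plain) releases job 101 and then stops with the prompt for "company 1 checks". Job 103 waits its turn even though it matches, so print order is preserved.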

I'm unaware of anything quite so friendly for Linux forms handling. Instead, as you've observed, we have lpd.

Have you investigated using the lpc command for this? By doing

lpc

holdall laz

[ run the check job ]

lpq

[ lists print jobs held. Figure out which one is your check-printing batch. ]

release jobid

[ releases the job with id "jobid" for printing ]

release laz

[ releases all remaining jobs, after special forms jobs are finished ]

You'd have to set up sudo to allow selected users to run lpc.

2. Try to use the queues feature built into lpr:

[JimD] The classic approach to this problem is to create additional queues on that printer. When you mount a form on a given printer, you use your printer system's control utility (lpc under Linux) to stop printing of all queues, and enable printing of just the one that relates to the currently mounted form. After you dismount the special paper (checks, pre-printed forms, etc), you stop the form queue and start the general queue(s).

[Dan] Suppose printcap entry "check" has the autohold flag ":ah:" in it, so jobs sent to it are normally held. After running checks using

lpr -Pcheck

you'd use lpc:

lpc

holdall laz

[ wait until printing on "laz" stops, then change forms ]

release check

[ or 'lpq' then 'release jobid' ]

[ wait until check printing stops ]

holdall check

release laz

This saves having to guess which jobs are checks.

[JimD] Note that you can stop printing of a queue without disabling submissions to it. Thus your other printing traffic will continue to queue up while the special forms are loaded. When you reload the normal paper, the other jobs will all get printed as normal.

[Dan] With the ":ah:" flag, you might not need to "holdall check" at the end; I'm not sure whether "release check" applies only to jobs currently in the spool, or to future jobs also.

[JimD] This is the whole reason why the BSD lpd supports multiple queues connected to any printer. It gives you some administrative flexibility. You can use it to support forms and special papers (colors, sizes, etc). You can also use it to (very roughly) manage priorities (so your time-critical monthly, quarterly, or annual accounting jobs can be given absolute priority over other printing traffic for a few days, for example).

Of course you can use lpc in shell scripts to automate the work of stopping and starting specific queues.
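As a rough sketch of such a script (an illustration under stated assumptions, not a tested procedure): the queue names "lp" and "check" are borrowed from this thread, the function name switch_form is made up, and the LPC variable is a hypothetical override so the sequence can be dry-run with LPC=echo instead of calling the real lpc.

```sh
#!/bin/sh
# Sketch only. LPC is an assumed override for dry runs (LPC=echo);
# by default the real lpc is called, which normally needs root or sudo.
LPC=${LPC:-lpc}

# switch_form FROM TO: stop printing on queue FROM, wait for the operator
# to change paper, then start printing on queue TO.
switch_form() {
    from=$1
    to=$2
    $LPC stop "$from"    # stops printing after the current job; jobs still queue
    printf 'Load the paper for queue "%s", then press ENTER: ' "$to" >&2
    read -r reply || true
    $LPC start "$to"     # start printing the queue that matches the loaded paper
}
```

A check run would then be "switch_form lp check", followed by "switch_form check lp" once the checks have printed. Whoever runs it still has to watch the printer; the script only saves typing.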

[Dan] Note the (admittedly confusing) lpr man page calls a printcap queue (declared with -P) a printer.

[Heather] Think "virtual printer" if it works better for you...

[JimD] None of this is as easy as we'd like. There are commercial packages which purport to offer "friendlier" and "easier" interfaces to printer management under Linux. I've never used any of them, nor have I played with CUPS or recent versions of LPRng. I've just managed to get by using the plain old BSD lpd, so far.

[Mike] Have you been able to do this without getting "cannot open /dev/lp0" errors? (I don't remember the exact error message.) I have two printers, HP LJ 4L and Epson Stylus Color 600, which I switch back and forth on the parallel port. Sometimes I have to bring down the LPD daemon entirely (or sometimes even reboot) in order to switch from one to the other.

[Dan] The topic was multiple virtual printers on the same physical device. It sounds like you're asking about multiple physical printers on the same hardware port.

[Mike] He and I both have two "drivers" (LPD stanzas) going to the same device. The difference is that he has one printer on the other side of the device, while I switch printers. But it's not switching printers that causes the "device in use" error; it also happens if I forget to switch the printers. Thus, why it could happen to Mr McClure too. Apparently LPD (from LPRng) doesn't close the device in a timely manner after finishing a print job, so that another driver can use the same device.

[Dan] I can probably concoct at least three other ways to do it.

The disadvantage of "start check" is you must remember to "stop check" when you're done. By using the ":ah:" flag you _might_ not have to remember one more step at the end.

Darned if I can see from the documentation what the functional difference between "stop printer" and "holdall printer" is. Both appear to allow queuing, while holding print. "holdall" doesn't appear to apply to current jobs, so the default non-check printer might finish printing more stuff after "holdall lp" (or whatever name is used for that printer) than "stop lp".

So maybe the perfect sub-optimal solution is:

lpc

stop lp

[ wait for current job to finish ]

[ insert check forms ]

start check

[ wait for checks to print ]

stop check

start lp

quit

or a setuid CGI that issues equivalent commands.

[Ben] <gag><choke><choke><gasp>

[JimD] Something more friendly than this could be cooked up as a simple set of shell scripts that were activated by CGI/PHP web forms.

[Heather] There's a CGI front-end for LPRng called LPInfo.

[Dan] But, I don't much like setuid CGIs.

[Ben] <understatement value="annual"> Gee, me either. </understatement>

I'd probably try the first solution suggested above, then set up some scripting stuff to save steps once the "easiest" procedure has been finalized.

[Dan] I still think the optimal solution is a forms control app run as the login shell of a printer control user.

But then, I always put a monitor someplace near each printer. Often a plain old text console.

3. Or you can see if one of the new printing systems makes it easier than we described here:

[JimD] However, it's definitely worth looking at the alternatives, so I'll list a couple of URLs that relate to printing under Linux (most of which will also be relevant to any other form of UNIX):

- The Linux Printing HOWTO

- http://www.linuxdoc.org/HOWTO/Printing-HOWTO/index.html

This has a section on spooling software which, naturally enough, includes links to the major free spooling packages.

- The Linux Printing Usage HOWTO

- http://www.linuxdoc.org/HOWTO/Printing-Usage-HOWTO.html

[Heather] This one's dusty and has some things just plain wrong (the PDF stuff, for example; xpdf is not an Adobe product at all) but it has an okay introduction to the bare lpr commands, if you have to go there. With any of the front-end systems below for printing, you might not need it:

- The LPRng HOWTO:

- http://www.astart.com/lprng/LPRng-HOWTO.html

Hey, check it out, you can specify job classes, so you could actually tell the single printer that it only has plain paper in it right now, so hold all jobs that are of the check class. I'm sure this can easily be extended to letterhead or other special forms. The tricky part is to have your check runs properly register that they are of the "check" class so this would work.

- [Mike] CUPS: the Common Unix Printing System

- http://www.cups.org

- PDQ: Print, Don't Queue

- http://pdq.sourceforge.net

- Links to these and more on the Linux Printing site.

- http://www.linuxprinting.org

Thanks for your help!

James

[Ben] Hope it does some good.

[Heather] You're welcome, from all of us!

Free Business Consulting: NOT!

From Abdulsalam Ajetunmobi

Answered By Jim Dennis

Dear Sir,

I am a Computer Consultant based in London, United Kingdom. I am, in conjunction with two other partners, making enquiry on how to set up Internet Service as a business outfit in line with the established ones like AOL, Compuserve etc. Our operation will be based in Africa.

Could you kindly advise me of what it entails and the modality for such a business. I would like to know the required equipment, the expertise and possibly the cost.

Thanks for your co-operation.

Yours faithfully,

Abdulsalam Ajetunmobi

[JimD] The Linux Gazette Answer Gang is not a "Free Business Consulting" service. We volunteer our time and expertise to answer questions that we feel are of interest to the Linux community.

It is true that Linux is ubiquitously used by ISPs as a major part of their network infrastructures. Actually FreeBSD might still have a bit of an edge over Linux. It's true that free UNIX implementations have grown to dominate the once mighty SunOS and Solaris foothold in that field.

Microsoft's NT gained some ground among ISP startups in the nineties; but lost most of that to their own instability, capacity limitations and pricing. NT at ISPs now exists primarily to support customers who demand access to Microsoft's proprietary FrontPage extensions or other proprietary protocol and service offerings.

So some might claim that your question is indirectly "about Linux." Of course that would be like saying that questions about setting up a new automotive dealership are "about automotive mechanics."

Here's my advice: if you don't know enough about the "modality" of the ISP business, if you have to ask us what setting up an Internet service entails, then you aren't qualified to start such a business.

First, the basic technical aspects of setting up an internet service should be obvious to anyone who has used the Internet. You need a persistent, reliable set of high speed and low latency connections to the Internet. (Duh!) You need some equipment (web servers, name servers, mail exchangers and hosts, routers, hubs, and some sort of administrative billing and customer management systems --- probably a database server). You need the technical expertise to manage this equipment and to deal with the vendors (mostly telcos; telephone service companies and other ISPs) that provide you with your Internet services.

Some elements that are non-obvious to casual Internet users are: ISPs are loosely arranged in tiers. Small, local ISPs connect to larger regional ISPs. Regional ISPs perform "peering" with one another and with larger, international ISPs. Some very large ISPs (like AOL/Compuserve and MSN, etc) get to charge hefty peering fees from smaller and intermediate ISPs. When you link up with "podunk.not" they often only have one connection to one "upstream" provider. A better "blueribbon.not" might have a couple of redundant POPs (points of presence) and redundant links to a couple of upstream providers.

Now, the business requirements (for any business) depend on a detailed understanding of the business at hand. You have to know how to get the service or product on the "wholesale" side, possibly how to package and/or add value to that service or product, and how to re-sell it to your customers. If you don't know the difference between a third tier ISP and a backbone provider; you don't know enough to formulate a sensible business plan in that industry. If you don't have contacts in that industry and in your market segment within that industry then you should seriously ask what possible advantage you could have over your competitors.

(Don't start any business without an advantage. That makes no sense. If you don't truly believe in your advantage --- go work for someone who does have one).

Perhaps you think that you won't have any competitors in Africa; or that you have some business angle that none of them have. Great! Now go find and hire someone who knows that business in that market. Then you can do your own feasibility study to see if there are real opportunities there.

Keep in mind that you are likely to need professional contacts in the regional governments where you intend to operate. Throughout most of the "third world" there is quite a bit of overt corruption --- and outright graft is just a part of doing business in most places outside of the United States and western Europe. Don't get me wrong, I'm not saying that the governments and bureaucracies in Africa are more corrupt than those in the U.S. --- just that the corruption is more overt and the graft is more likely to be direct cash, rather than through the U.S. subterfuges of "campaign contributions" and various other subtleties.

Anyway, if you don't like my answer keep in mind that this question is basically not appropriate for this forum. Other readers will probably flame me and call me a racist for my comments about the customs in other countries. Oh well. I'll just drop those in /dev/null. (Rational refutations; pointing to credible comparisons or independent research would be interesting, though).

Success and Horror Stories

From Faber Fedor

Answered By Jim Dennis

Anyone know where I can find success/horror stories about setting up and running VPNs under (Red Hat) Linux? I've got all the HOWTOs, tutorials, and theory a guy could want. I've even heard rumblings that a Linux VPN isn't "a good business solution" but I've not seen any proof one way or another.

TIA!

[JimD] It would be really cool if crackers had a newsgroup for kvetching about their failures. Then their horror stories might chronicle our successes.

However, there isn't such a forum, to my knowledge. Even if there was, it would probably not get much "legitimate" traffic considering that crackers thrive on their reputation for successful 'sploits. They'd consider it very uncool to catalogue their failures for us.

Aside from that any forum where firewalls, VPNs and security are discussed is likely to be filled with biased messages and opinions. Some of the bias is deliberate and commercially motivated ("computer security" is a competitive, even cut throat, business). In other cases the bias may be less overt. For example the comp.security.firewalls attracts plenty of people with a decided preference for UNIX. I don't see any recent traffic on comp.dcom.vpn (but that could be due to a dearth of subscribers at my ISP --- which dynamically tailors its newsfeeds and spools according to usage patterns).

I would definitely go to netnews for this sort of research. It tends to get real people expressing their real preferences (gripes especially). Most other sources would be filled with marketing drivel and hype, which is particularly prevalent in the fields that relate to computer security and encryption.

(I visited the show floor at the RSA conference in San Francisco last month. It was fascinating how difficult it was for me to figure out whether each company was hawking services, software or hardware --- much less actually glean any useful information about their products. Talk about an industry mired in vagueness!)

Incidentally, the short answer to the question "What are my choices for building a VPN using Linux systems?" comes down to a choice among:

- FreeS/WAN (Linux implementation of the IETF IPSec standards)

- http://www.freeswan.org

- CIPE (Crypto IP Encapsulation over UDP)

- http://sites.inka.de/~W1011/devel/cipe.html

- VTun

- http://vtun.sourceforge.net

- vpnd

- http://sunsite.dk/vpnd

- PoPToP (MS PPTP compatible)

- http://poptop.lineo.com

There are probably others. However, I've restricted my list to those that I've heard of, which have some reasonable reputation for security (actually the PPTP protocol seems to be pretty weak, but I've included PoPToP in case a requirement for Microsoft compatibility and an aversion to better MS compatible tools overrides better judgment). I've only listed tools which are able to route TCP/IP traffic (rather than including application specific single connection "tunnels" --- which would be adequate for some applications but which don't constitute a "VPN").

I specifically left out VPS (a project that used PPP through ssh tunnels). This approach was useful in its day (before FreeS/WAN was released and while CIPE et al. were maturing). However, the performance and robustness of a "PPP over ssh" approach was just barely adequate when I was last using it with customers. I've recommended that they switch.

Normally I'd recommend the Linux Documentation Project (LDP) HOWTOs. However, this is one category (http://www.linuxdoc.org/HOWTO/HOWTO-INDEX/networking.html#NETVPN) where the LDP offerings are pretty paltry (I should try to find time to contribute more directly there). In fact the VPN HOWTO (http://www.linuxdoc.org/HOWTO/VPN-HOWTO.html) suggests and describes the VPS (PPP over ssh) approach (though it doesn't use the VPS software package, specifically). I've blind copied the author of that HOWTO on this, in case he feels like updating his HOWTO to point at the most recent alternatives for this.

The other HOWTOs in this category relate to running FreeS/WAN or CIPE behind an IP masquerading router (or Linux box), and using PPP over a telnet/tunnel to "pierce" through a firewall.

Hope that helps. There isn't much in the way of "easy to use" prepackaged VPN distros, yet.

RE: Linux Dialin Server

From Kashif Ullah Jan

Answered By Karl-Heinz Herrmann

Pls provide info regarding DIAL-IN SERVER for Linux with CALL BACK Facility.

[K.H.] This depends heavily on how your call back server is configured, so some more information would help us help you.

I have access to a call back server here. It is set up to dial back to me, but it will act as server, i.e. it will insist on choosing the IP and everything. It also will not authenticate itself properly (or I couldn't figure out how); instead, I have to authenticate myself to the call back machine as if I were logging in there.

Basically you need some program which is listening to your modem and acts on connections. I use mgetty, which even has an auto PPP detection mode. http://www.leo.org/~doering/mgetty

A properly configured mgetty listening on the modem will not disturb outgoing connections. Only when the modem is free again will it start listening for incoming calls.

Then you will have to set up pppd so that incoming calls detected as "autoppp" will authenticate themselves correctly to the call back server. That's basic pppd setup with pap secrets here, but can be different for you.

If you have more specific questions I can try to help you along.

K.-H.

hello gang out there, another sendmail case :>

From Piotr Wadas

Answered By Faber Fedor

I have the following sendmail problem: For backup purposes my boss ordered me to force sendmail to make a carbon or blind copy of each mail (which comes in, goes out, or is to be relayed through the box) to a specified account.

[Faber] I personally spent three weeks trying to figure out how to do this. After much research, gnashing of teeth and pulling of hair (and finally consulting an email guru/colleague), the answer is "You can't do that in sendmail".

While browsing sendmail docs all I found were some mysterious hints about a sendmail 'scripting language' which is supposed to be called "Milton" or "Miller" or something like that, which allows that feature, and is to be installed by patching sendmail and re-compiling it.

[Faber] I looked into that, and that requires you to write your rules in the C programming language, IIRC.

But I feel there must be a simpler rule to do this - maybe by rewriting some "From:" and "To:" envelopes or something?

[Faber] You'd think so, wouldn't you. One fellow had an example of a sendmail.cf rule that supposedly will do what you describe, but I never found anyone who actually got it working.

Are you familiar with such a problem?

[Faber] Intimately.

However, there is an easy solution: install postfix. Postfix is a "drop-in replacement" for sendmail, i.e. any programs that already rely on sendmail will continue to work without any changes on your part.

Doing what you want with postfix takes just one added line in a configuration file. And there are two nice howtos (written by the above-mentioned mail guru) that you can read at

http://www.redhat.com/support/docs/howto/RH-postfix-HOWTO/book1.html

and at

http://www.moongroup.com/docs/postfix-faq

(They assume you're running Red Hat and using RPMs, but they're still legible.)
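
For anyone wondering what that one line amounts to: as far as I know, the Postfix parameter in question is always_bcc in main.cf, which takes the address of the archive mailbox. Conceptually it adds one more envelope recipient to every message and leaves the From: and To: headers alone. Here is a minimal Python sketch of that idea. The Envelope class, the with_archive_copy function and the example.com addresses are all made up for illustration; none of them are part of Postfix or sendmail.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Envelope:
    """SMTP envelope: who a message is delivered to, separate from its headers."""
    sender: str
    recipients: List[str] = field(default_factory=list)


def with_archive_copy(envelope: Envelope, archive_address: str) -> Envelope:
    """Return a new envelope that also delivers to the archive mailbox.

    The original envelope is left untouched, and the archive address is
    added only once even if it is already among the recipients.
    """
    recipients = list(envelope.recipients)
    if archive_address not in recipients:
        recipients.append(archive_address)
    return Envelope(sender=envelope.sender, recipients=recipients)


if __name__ == "__main__":
    # Hypothetical addresses, for illustration only.
    original = Envelope("alice@example.com", ["bob@example.org"])
    copied = with_archive_copy(original, "archive@example.com")
    print(copied.recipients)
```

Because only the envelope changes, the people named in the headers never see the archive address, which is what makes it a "blind" copy.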

Linux Kernel Crashdumps: HOW?

From Sachin

Answered By Jim Dennis

Hi All,

How do we configure a dump device on Linux (SuSE 7.1) so that when the system panics I can get a kernel crash dump? I have two SCSI disks and want to use one of them as the dump device.

Thanks,

Sachin

[JimD] Linux doesn't crash. (Well, not very often, anyway).

More to the point, the canonical Linux kernel doesn't include "crashdump" support (where a kernel panic dumps the system core state to the swap partitions or some other device). Linus doesn't consider this to be a sufficiently compelling feature to offset the increased code complexity that it entails. (Linux also doesn't panic as easily as some other UNIX kernels --- it will log an "Oops" for those hardware errors or device driver bugs that are considered "recoverable").

However, if you really want this feature, you can apply the "lkcd" (Linux Kernel Crash Dump) kernel patches from SGI's OSS (Open Source Software) web site at:

http://oss.sgi.com/projects/lkcd

You'll also want to grab the suite of utilities that goes with the kernel patch. The vmdump command configures the kernel to use its dump feature (telling it which swap partition to use, for example) and another vmdump directive is normally used to detect and save dumps. (If you're familiar with the 'savecore' command in some other forms of UNIX, then this will make sense to you).

There's also an 'lcrash' utility which is used to help perform crashdump analysis.

Note that there are a number of other "unofficial" kernel patches like this one. For example there are interactive kernel debuggers that you can compile into your system's kernel.

You can read about some of them at:

http://oss.sgi.com/projects

... and find more at:

- Rock Projects Collection (takes over where Linux Mama left off)

- http://linux-patches.rock-projects.com

- LinuxHQ

- http://www.linuxhq.com/kernel

(Look for the links like "Unofficial kernel patches").

- IBM ("Big Blue")

- http://oss.software.ibm.com/developer/opensource/linux/patches/kernel.php

(Mostly small, deep performance tweaks and bugfixes, and simple feature enhancements).

- Ibiblio (formerly Metalab, formerly Sunsite.unc.edu)

- http://www.ibiblio.org/pub/Linux/kernel/patches!INDEX.html

(Mostly very old).

- Andrea Arcangeli (et al)'s In Kernel Debugger:

- ftp://e-mind.com/pub/andrea/ikd

- The International/Crypto Support Patch

- http://www.kerneli.org

- FreeS/WAN IPSec (includes some patches which aren't at kerneli)

- http://www.freeswan.org

- Solar Designer's Security Features Patches

- http://www.openwall.com/linux

- ... and some additions to that from "Hank":

- http://www.doutlets.com/downloadables/hap.phtml

- ... and the "Linux Intrusion Defense/Detection System"

- http://www.lids.org

(which mostly incorporates and builds upon the Openwall patches and lots more)

- U.S. National Security Agency's "Security Enhanced" Linux

- http://www.nsa.gov/selinux/download.html

(Yes, you read that right! The secretive "no such agency" has released a set of open source Linux patches. Everybody's getting into the Linux kernel security patch game!)

- ... and even more

- http://www1.informatik.uni-erlangen.de/tree/Persons/bauer/new/linux-patches.html

(Links with some duplicates to the list I've created here).

I've deliberately left out all of the links to "real-time" kernel patches. (I think I created a link list for an answer that related to various forms of "real-time" Linux (RTLinux, RTAI, KURT, TimeSys.com et al.) within the last couple of months. Search the back issues for it if you need more on that.)

So, obviously there are a lot of unofficial kernel patches out there.

One reason I went to the bother of listing all these sites is that I'm guessing that you might be doing kernel development work. Linux kernels just don't crash very often in production use, so that seems like the most likely reason for anyone to need crash dump support. (Besides, it'll amuse the rest of my readership).

Among these many patches you may find good examples and useful code that you can incorporate into your work.

Homework assignment: define these Linux terms

From Maria Alejandra Balmaceda

Answered By Karl-Heinz Herrmann

I would like to know if you can define these words for me:

[K.H.] I can try at least some of them:

Linux UNIX

[K.H.] UNIX is an operating system developed around 1969 at Bell Labs, according to: http://minnie.cs.adfa.edu.au/Unix_History

- another history overview is on:

- http://perso.wanadoo.fr/levenez/unix

Since then many clones and reimplementations of very similar operating systems have been released. Most of them were developed by some company and sold running on their hardware (HP's HP-UX, IBM's AIX, DEC's OSF/1, Cray's UNICOS, ...).

Another one of them is Linux -- a Unix kernel rewrite started as a project by Linus Torvalds, with the remarkable difference that the Linux kernel was and is free -- free in the sense that everybody has access to the source and is free to redistribute and modify it.

Linus' work was made possible by another project: GNU. See below.